From Intuition to Evidence: Clean Evidence for Problem-Validation (part 3)

A practical guide to collecting evidence that holds up under scrutiny and keeps your product decisions grounded in reality.

Every founder collects information. Few gather clean evidence.

What you will get here: a practical way to capture signals you can trust, with a simple form, a signal ladder, and an interview flow you can run this week.

Everything to this point structures your intuition so you can state the problem clearly. But here’s the dirty truth of founder intuition: without clean evidence, intuition remains an assumption. Even if the assumption is directionally correct, evidence adds the conditions and nuance. With evidence, your goal is to learn:

When the problem actually shows up (contexts, triggers, frequency).

For whom it’s truly painful (segment, role, maturity).

What “success” really means for that stakeholder (outcome definition).

Which edge cases matter (and which to ignore for v1).

Intuition gives you the direction; evidence gives you the details.

What constitutes clean evidence?

Clean evidence is behaviour-backed, bias-aware, and repeatable. You can obtain it using the following techniques:

Unbiased discovery: problem interviews with the people feeling the pain; no pitching, no leading questions.

Observed behaviour: concierge runs, prototype walk-throughs, workflow shadowing.

Willingness signals: time invested, data access granted, or money committed (pilot, LOI, pre-pay).

Triangulation: the same pains and blockers recur across different sources and methods.

Replicability: signals hold with new people in the same segment.

However, not all evidence carries the same weight. Understanding the differences between evidence types keeps you from mistaking weak signals for strong ones.

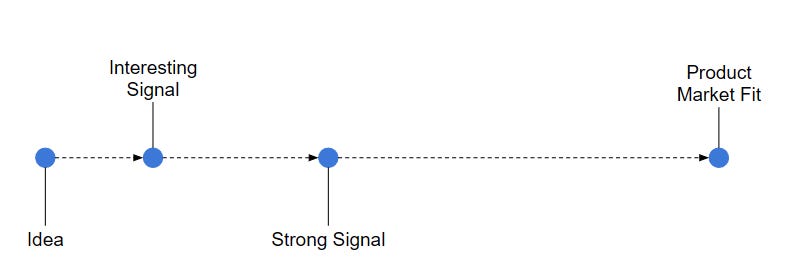

Here is how experienced product leaders read signals on the path from idea to fit.

Idea

You have a hypothesis and early anecdotes. Output is a clear problem statement and a first stakeholder map.

Interesting signal

You hear positive statements from customers, and the same pain is repeated by multiple people. You may even see some preliminary interest in the form of light actions.

Example customer statements: “I like it.” “Looks useful.” “Send me more info.” “We would use this.”

Example behaviours: unprompted pain in interviews, users book a session, share a small dataset, agree to try a clickable prototype.

Strong signal

You see behaviour change and real commitments in either time or money.

Example customer statements: “We are using it to achieve [goal].” “It cut our time by about 30%.” “Can we run a pilot?”

Example behaviours: paid pilot or LOI, pre-pay, repeated use of a concierge/prototype, clear leading-indicator lift (time saved, error rate down, conversion up).

Product-market fit

Multiple users regularly use your product to achieve their goals, and you see reliable pull and renewals within one segment.

Example customer statements: “We use it regularly to hit [goal].” “We cannot run X without it.” “We renewed/added seats.”

Example behaviours: regular use without chasing, multiple teams ask for access, renewals or expansions happen on schedule.

The importance of reading signals correctly

Distinguishing between signal strengths matters. Confusing weak signals with strong ones often leads founders to build something they think is valuable but that actually needs more validation before it is market-ready. One way to guard against this is to set a minimum bar to continue.

Minimum bar to continue

From 5–10 people in one segment, you have:

at least 2 actions (booked session, data shared, trial usage), and

at least 1 commitment (LOI, paid pilot, pre-pay) or a clear leading-indicator lift (for example, time saved ≥ 30%).

If you miss the bar, tighten the segment, sharpen the problem, or stop.
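The minimum bar above can be written down as a small check, which helps keep the decision honest. This is a minimal sketch, not a prescribed tool: the `Signal` record, the label names in `ACTIONS` and `COMMITMENTS`, and the function name are all assumptions made for illustration. It also reads "5–10 people" as "at least 5", and uses time saved as the only leading indicator.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Hypothetical labels for the examples named in the minimum bar.
ACTIONS = {"booked_session", "data_shared", "trial_usage"}
COMMITMENTS = {"loi", "paid_pilot", "pre_pay"}


@dataclass
class Signal:
    person: str  # who produced the signal
    kind: str    # one of the labels in ACTIONS or COMMITMENTS


def meets_minimum_bar(
    signals: Iterable[Signal],
    people_in_segment: int,
    time_saved_pct: Optional[float] = None,
) -> bool:
    """Return True if evidence from ONE segment clears the bar to continue."""
    signals = list(signals)
    # Assumption: fewer than 5 people is too small a sample to judge.
    if people_in_segment < 5:
        return False
    actions = sum(1 for s in signals if s.kind in ACTIONS)
    commitments = sum(1 for s in signals if s.kind in COMMITMENTS)
    # Leading-indicator lift, using the article's example threshold of 30%.
    has_lift = time_saved_pct is not None and time_saved_pct >= 30.0
    return actions >= 2 and (commitments >= 1 or has_lift)


evidence = [
    Signal("research lead A", "booked_session"),
    Signal("research lead B", "data_shared"),
    Signal("research lead C", "paid_pilot"),
]
print(meets_minimum_bar(evidence, people_in_segment=6))
```

If the check returns `False`, the article's advice applies: tighten the segment, sharpen the problem, or stop.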

Common misreads and fixes

“I like it” is not traction. Ask for a calendar slot or a commitment that moves them along the sales funnel.

One success is not PMF. Look for repeated behaviour across people and weeks.

Hand-held trials are not use. Count unsupervised usage.

Enthusiasm without budget is weak. Ask who signs and what budget it comes from.

Mixed segments break replication. Validate within one segment before you broaden.

The Strong → PMF Gap

You may have also noticed that the gap between strong signals and PMF is significantly wider than the gap between weak signals and strong signals. The reason for this is simple. Strong signals show local fit. PMF means the fit holds across varied contexts, roles and weeks, and, most importantly, without you prompting it. One team’s success does not guarantee another team’s success unless the conditions match.

A simplified system for documenting evidence

While there are several robust systems for capturing and documenting evidence (something that I will write about in a later post), a simple way to organise evidence is to use a single page (or form submission) per item. You can spin this up in Google Forms or Notion to make capture fast and consistent.

For ad-hoc notes, links, emails, and call snippets, fill in the form below:

# Evidence Log (single item)

Title:

Evidence type: (User | Competitor | Industry)

Stakeholder/segment:

Date & method: (interview | prototype | concierge)

Top observations (max 3, with quotes/timestamps):

Source link(s): (recording, deck, doc)

Unprompted pain? (Yes/No)

Willingness signal (if any): (time | data | money)

Submitter:

Example:

Title: Monday tracker noise blocks CMO deck

Evidence type: User

Stakeholder/segment: Agency research leads, brand trackers (mid-market)

Date & method: 3 Oct, interview

Top observations:

“I spend hours cleaning weird spikes and I’m never sure if they’re fraud or real.”

Friday data pull is the pinch point; two KPIs spike most often.

They export to Excel because current tool flags but does not explain anomalies.

Source links: Zoom recording 34:10, notes doc

Unprompted pain? Yes

Willingness signal: Shared sample dataset; booked a 45-minute prototype review next week

Submitter: DL
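If you would rather keep the log in a script or spreadsheet export than in Google Forms or Notion, the same form maps onto a small record type. The sketch below is one possible shape, not a required schema: the class name, field names, and validation rules are assumptions, including the choice to require at least one observation. The picker values and the sample entry come from the form and example above.

```python
from dataclasses import dataclass
from typing import List, Optional

# Picker values taken from the form above.
EVIDENCE_TYPES = ("User", "Competitor", "Industry")
METHODS = ("interview", "prototype", "concierge")


@dataclass
class EvidenceItem:
    """One Evidence Log entry. Field names are illustrative only."""
    title: str
    evidence_type: str
    segment: str
    date: str                  # kept as free text, e.g. "3 Oct"
    method: str
    observations: List[str]    # max 3, with quotes/timestamps
    source_links: List[str]
    unprompted_pain: bool
    submitter: str
    willingness_signal: Optional[str] = None  # time | data | money, if any

    def __post_init__(self) -> None:
        # Reject entries that do not match the form's pickers and limits.
        if self.evidence_type not in EVIDENCE_TYPES:
            raise ValueError(f"unknown evidence type: {self.evidence_type}")
        if self.method not in METHODS:
            raise ValueError(f"unknown method: {self.method}")
        if not 1 <= len(self.observations) <= 3:
            raise ValueError("record between 1 and 3 observations")


item = EvidenceItem(
    title="Monday tracker noise blocks CMO deck",
    evidence_type="User",
    segment="Agency research leads, brand trackers (mid-market)",
    date="3 Oct",
    method="interview",
    observations=[
        "“I spend hours cleaning weird spikes and I’m never sure if they’re fraud or real.”",
        "Friday data pull is the pinch point; two KPIs spike most often.",
        "They export to Excel because current tool flags but does not explain anomalies.",
    ],
    source_links=["Zoom recording 34:10", "notes doc"],
    unprompted_pain=True,
    submitter="DL",
    willingness_signal="Shared sample dataset; booked a 45-minute prototype review next week",
)
```

Capping observations at three in code enforces the same discipline as the form: each entry stays short enough to review quickly.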

A simplified system for interviewing

When you are capturing evidence from formal interviews, use this:

Who/When: stakeholder (+ segment), date, method (interview/prototype/concierge)

Top 3 observations (bullets with quote/time; no solutions)

Emerging Opportunity (optional): “Users struggle to ___ because ___.”

Evidence Type / Audience (same pickers as above)

Links: transcript/recording/artifacts

(Optional) Willingness signal: Data access / LOI / time invested (Y/N)

💡Tips to maximise this approach

Do

Capture what you saw/heard; use their words where possible.

Add links to source materials/raw evidence to keep an auditable trail.

Use bias busters:

Replace “Would you use X?” with “Tell me about the last time Y happened.”

Replace “Do you like this?” with “What would you do next here?”

Replace “Would you pay?” with “Who signs off and what budget does this come from?”

Don’t

Pitch or propose a solution in the capture.

Merge personas (“Franken-customer”). Identify one stakeholder class and write evidence from their perspective.

Keep a lightweight Evidence Log (one card/row per submission). The product person processes each item into Insights or Opportunities and then clusters them into associated problems to be tackled together.

Replication rule: Aim for 5–10 conversations or tests within one segment showing the same top pain before you invest engineering time.
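The replication rule can be checked with a simple tally. This is a rough sketch under stated assumptions: each conversation is reduced to a hypothetical `(segment, top_pain)` pair, the pain labels are already normalised by hand so that the same pain always carries the same label, and the lower end of the 5–10 range is used as the threshold. The sample data is invented for illustration.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def replicated_top_pain(
    conversations: Iterable[Tuple[str, str]],
    segment: str,
    minimum: int = 5,
) -> Optional[str]:
    """Return the most common top pain in `segment` if it was heard in at
    least `minimum` conversations, otherwise None."""
    # Only count conversations from the one segment being validated.
    pains = Counter(pain for seg, pain in conversations if seg == segment)
    if not pains:
        return None
    pain, count = pains.most_common(1)[0]
    return pain if count >= minimum else None


# Invented sample: five matching conversations in one segment, plus noise.
log = [("agency research leads", "unexplained KPI spikes")] * 5
log.append(("agency research leads", "slow exports"))
log.append(("in-house analysts", "unexplained KPI spikes"))

print(replicated_top_pain(log, "agency research leads"))
```

Filtering by segment before counting is the point of the design: a pain heard five times across mixed segments does not satisfy the rule.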

💡Tip: When defining a market, start narrow (one role, one job, one context) and only broaden when clean evidence shows homogeneous behaviour.

Do this next (10 minutes)

Create a Notion database from the Evidence Log template above.

Book 3 interviews in one segment this week using the outline.

Define your minimum bar to continue from the signal ladder and write it at the top of your log.

Next: From Intuition to Evidence: Building Ruthless Focus (Final Part)

Previous: From Intuition to Evidence: Harnessing the power of Founder Intuition (part 2)